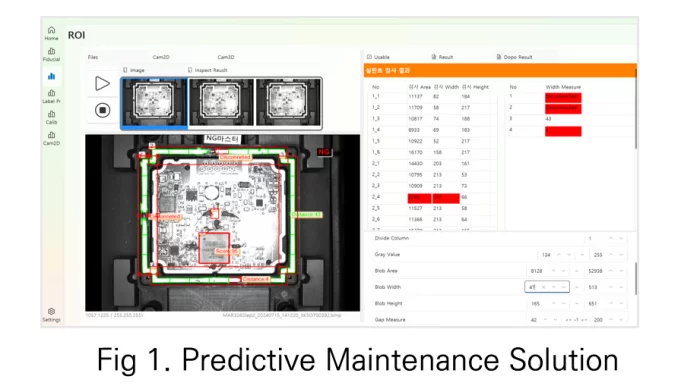

LLM-Based Predictive Maintenance Solution

LLM-based solution for early anomaly detection and defect prevention

Products & Services

Driver Monitor System

Opt-AI Inc.

Our in-vehicle monitoring solution uses VLM-powered interior and exterior cameras to deliver real-time driver and passenger awareness. By integrating AI models for facial landmark and head pose detection, gaze tracking, object recognition, and human pose estimation, the system supports safety features such as drowsiness detection, distraction monitoring, and awareness of surrounding objects.

Optimized for automotive SoCs, including those from Qualcomm, and running on QNX RTOS and Linux, our solution combines model compression, embedded optimization, and a streamlined development pipeline. This delivers reliable, low-latency performance tailored for next-generation connected and intelligent vehicles.

Edge-Optimized Object Detection & Tracking Solution

Opt-AI Inc.

We developed and optimized an object detection and tracking solution tailored for edge environments using Intel Atom platforms with OpenVINO. The system combines MobileNetV3-SSDLite for detection and MobileNetV3-Small for tracking, enabling efficient real-time performance on resource-constrained devices.

Through model optimization and inference acceleration, we achieved a more than 2x improvement in FPS and a more than 50% improvement in inference speed while maintaining detection and tracking accuracy. This demonstrates the solution’s suitability for lightweight, low-power edge AI applications.

On-device LLM Solution

Opt-AI Inc.

OptAI’s on-device LLM solution optimizes and compresses models for mobile NPUs such as Qualcomm Snapdragon and Samsung Exynos, enabling a broad spectrum of AI services including automatic note generation, real-time translation, question answering, and personal assistant features. Free from cloud dependency, it delivers seamless performance with high efficiency and ultra-low latency. With OptAI, your device evolves into a truly intelligent and personalized AI companion.

On-Device AI Detection Solution for Ships and Aircraft

Opt-AI Inc.

In collaboration with Hanwha Systems, we developed and optimized a Transformer-based AI model for ship and aircraft detection on embedded platforms. Targeting NVIDIA Jetson Orin AGX, the project utilized the MSAR (640×640) dataset along with large-scale training databases built from public sources.

The RT-DETR model, currently under review, was optimized through hardware-friendly design, INT8 quantization, and specialized inference acceleration. As a result, the solution achieved up to 5x faster inference while maintaining accuracy, reaching 20% of NVIDIA TensorRT performance. This demonstrates the ability to deliver reliable, real-time detection in resource-constrained embedded environments, enabling next-generation defense and surveillance applications.
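
As a rough illustration of what INT8 quantization does, the sketch below applies symmetric per-tensor quantization to a handful of weights in plain Python. It is a generic textbook scheme, not the pipeline used in this project; the function names `quantize_int8` and `dequantize` and the sample weights are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization (illustrative only)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric INT8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from INT8 codes."""
    return [c * scale for c in codes]


# Hypothetical sample weights, chosen only to make the arithmetic easy to follow.
weights = [0.5, -1.27, 0.0, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per element is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is stored as one signed byte plus a shared scale factor, which is what lets integer hardware paths run the model faster at a small, bounded cost in precision.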

Description

This solution uses LLM-based data interpretation to detect process anomalies early, providing alerts and countermeasures before defects occur and leaving sufficient time to respond. It also analyzes in-process issues such as positional misalignment and print degradation patterns, matches them against event timelines, identifies the root causes of defects, and delivers the findings as analytical reports.
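
To make the idea of alerting before a defect occurs concrete, here is a minimal sketch that extrapolates a drifting measurement toward a tolerance limit. It deliberately swaps in a simple least-squares trend line for the LLM-based interpretation described above, so it illustrates only the early-warning logic, not the product's method. The names `steps_until_limit`, `misalignment_mm`, `tolerance_mm`, and `alert_horizon`, and all sample values, are hypothetical.

```python
def steps_until_limit(readings, limit):
    """Fit a least-squares line to the readings and estimate how many more
    steps remain until the fitted line reaches the limit.

    Returns None when there are too few readings or the trend is not rising.
    """
    n = len(readings)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(readings) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    sxy = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing = (limit - intercept) / slope  # index where the line hits the limit
    return max(0.0, crossing - (n - 1))     # steps left after the latest reading


# Hypothetical positional-misalignment readings, one per process step.
misalignment_mm = [0.10, 0.12, 0.14, 0.16, 0.18]
tolerance_mm = 0.30   # assumed defect threshold
alert_horizon = 10    # assumed lead time, in steps, needed to respond

remaining = steps_until_limit(misalignment_mm, tolerance_mm)
alert = remaining is not None and remaining <= alert_horizon
```

With these sample values the drift is projected to reach the tolerance in about six steps, which is inside the assumed horizon, so the alert is raised while there is still time to act.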
